Why Did Huanhe Shut Down? A Detailed Look at the Reasons Behind Huanhe's Closure

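Just a few days ago, Tencent's Huanhe (幻核), one of China's leading digital collectibles trading platforms, announced that it would stop issuing digital collectibles. All users may choose either to keep the digital collectibles they have already purchased or to request a refund. In fact, this move did not come out of the blue; things developing this way had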

2023-03-20 11:47

What Do Smart Contracts Do? What Functions Can They Implement?

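In most cases, smart contracts are built on so-called "if-then" functions: once a given event occurs and the required information is available, the contract automatically executes the prescribed steps, with no human intervention at any point. Smart contracts have a wide range of applications; keep in mind that in recent years the crypto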

2023-03-16 13:40
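
To make the "if-then" idea concrete, here is a minimal Python sketch of the pattern, not real on-chain code. It assumes a hypothetical escrow contract (the class `EscrowContract`, the event name `delivery_confirmed`, and the `tracking_id` field are all invented for illustration): when the triggering event arrives with the required data, the contract pays the seller automatically, and it ignores any other event.

```python
from dataclasses import dataclass, field


@dataclass
class EscrowContract:
    """Hypothetical escrow: pays the seller once delivery is confirmed."""
    buyer: str
    seller: str
    amount: int
    released: bool = False
    log: list = field(default_factory=list)

    def on_event(self, event: str, data: dict) -> None:
        # "if": the triggering event has occurred, the required info is present,
        # and the funds have not been released yet
        if event == "delivery_confirmed" and data.get("tracking_id") and not self.released:
            # "then": execute the prescribed step with no human intervention
            self.released = True
            self.log.append(f"paid {self.amount} to {self.seller}")


contract = EscrowContract(buyer="alice", seller="bob", amount=100)
contract.on_event("shipment_created", {"tracking_id": "T1"})    # condition not met, no effect
contract.on_event("delivery_confirmed", {"tracking_id": "T1"})  # condition met, pays out
contract.on_event("delivery_confirmed", {"tracking_id": "T1"})  # already released, no double payment
print(contract.released, contract.log)
```

The `released` flag is part of the condition so that a repeated event cannot trigger the payout twice; real contracts need similar guards because the same message may be delivered more than once.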

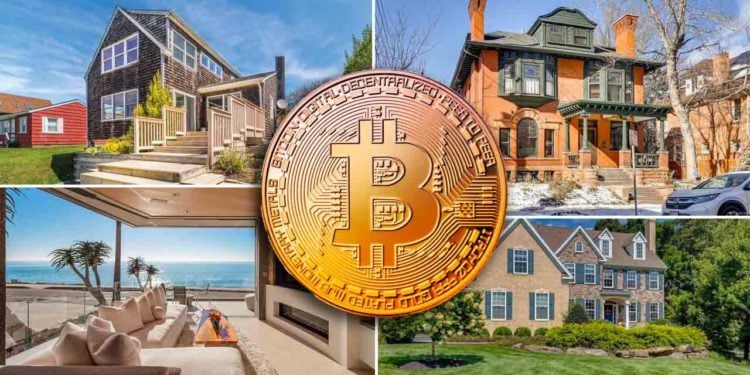

What Is an Investment DAO? A Quick Guide to Investment DAOs

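The decentralization that blockchain enables has brought sweeping changes to many fields, such as investing and other financial services. The investment DAO introduced today is an ordinary decentralized autonomous organization (DAO) dedicated to raising funds on behalf of its community members and investing them in a range of assets. Now, startups and entrepreneurs no longer have to wait for venture capital firms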

2023-03-08 21:37